Computer Vision-Based Basketball Coach

OpenCV, Python, Computer Vision, Object Tracking

Overview

This project uses computer vision to analyze, score, and provide feedback on your basketball shot, effectively functioning as a virtual coach.

The program tracks the ball’s trajectory and your body’s movement, comparing these to a ground truth (a “perfect” shot). It then calculates a performance score and generates a PDF report with specific feedback.

Teammate: Srikanth Schelbert

GitHub: https://github.com/henryburon/cv_basketball_trainer

Motion Tracking

The first step is to collect the data to be analyzed, compared, and scored.

Basketball Tracking Algorithm

I was primarily responsible for developing the algorithm that identifies and tracks the basketball through the video.

Algorithm: apply color mask » find contours » score contours » identify basketball

Applying the HSV color mask and finding the contours with OpenCV is straightforward. The goal is simply to reduce the number of candidate basketball contours in the frame, which makes the scoring step easier.

The contours are scored on:

- Size

- Squareness

- Distance to the basketball’s location in the previous frame

Each contour in the frame is graded on these three characteristics. The grades are weighted and summed into a single score for the contour. The contour with the highest score is assumed to be the basketball, and its coordinates are appended to the trajectory array.
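The weighted scoring can be sketched as follows. The weights, the expected ball size, the maximum per-frame jump, and the function names are all hypothetical placeholders; the project's actual grading functions and weights may differ.

```python
import math


def score_contour(box, prev_center, expected_side=40.0, max_jump=200.0,
                  weights=(0.3, 0.3, 0.4)):
    """Score one bounding box (x, y, w, h); higher means more ball-like.

    All defaults are illustrative assumptions, not the project's values.
    """
    x, y, w, h = box
    cx, cy = x + w / 2.0, y + h / 2.0

    # Size: 1.0 when the box matches the expected side length, 0.0 when far off.
    side = (w + h) / 2.0
    size_score = max(0.0, 1.0 - abs(side - expected_side) / expected_side)

    # Squareness: 1.0 for a perfect square, lower for elongated boxes.
    squareness = min(w, h) / max(w, h)

    # Proximity: 1.0 at the previous ball position, 0.0 beyond max_jump pixels.
    if prev_center is None:
        proximity = 0.0
    else:
        dist = math.hypot(cx - prev_center[0], cy - prev_center[1])
        proximity = max(0.0, 1.0 - dist / max_jump)

    w_size, w_square, w_prox = weights
    return w_size * size_score + w_square * squareness + w_prox * proximity


def pick_basketball(boxes, prev_center):
    """Return the center of the highest-scoring box, or None if there are none."""
    if not boxes:
        return None
    x, y, w, h = max(boxes, key=lambda b: score_contour(b, prev_center))
    return (x + w / 2.0, y + h / 2.0)
```

Calling `pick_basketball` once per frame and feeding its result back in as `prev_center` builds up the trajectory one point at a time.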

Figure 1. Scored contour rectangles. Green outline indicates basketball. Red circle is location in previous frame.

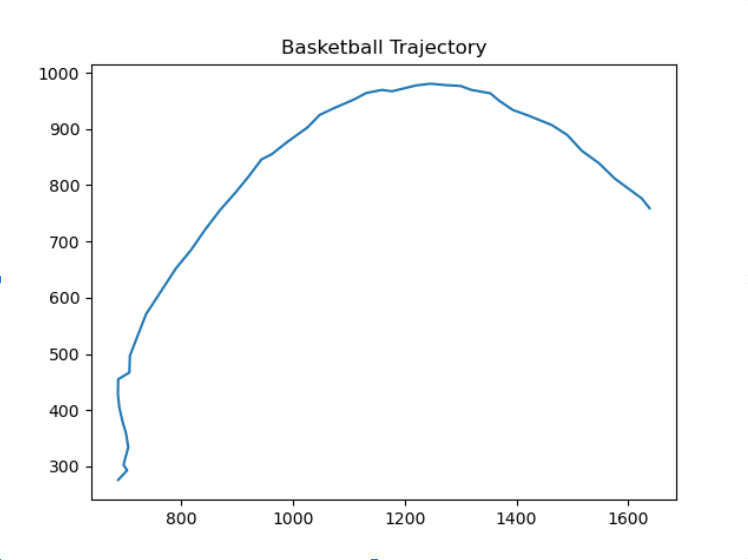

Figure 2. Sample collected basketball trajectory data.

Body Movement Tracking

We used MediaPipe to track the body during the shot.

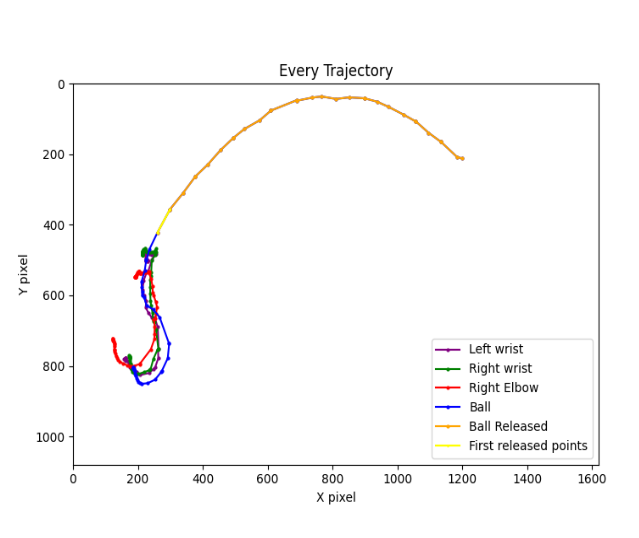

Specifically, we tracked the motion of the wrist and elbow, as their movement greatly influences a shot’s success.

Combining this data with the basketball’s motion let us identify the moment the ball left the player’s hands.
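One plausible way to find the release frame is to watch the distance between the ball and the wrist, and take the first frame where it stays above a threshold. This is only a sketch of the idea: the threshold, the `hold` window, and the `find_release_frame` name are assumptions, and the project may detect release differently.

```python
import numpy as np


def find_release_frame(ball_xy, wrist_xy, threshold=60.0, hold=3):
    """Return the first frame index where the ball stays farther than
    `threshold` pixels from the wrist for `hold` consecutive frames.

    Returns None if the ball never separates. Both inputs are per-frame
    (x, y) positions of equal length.
    """
    ball = np.asarray(ball_xy, dtype=float)
    wrist = np.asarray(wrist_xy, dtype=float)
    dist = np.linalg.norm(ball - wrist, axis=1)
    apart = dist > threshold
    # Require several consecutive frames so a single noisy detection
    # is not mistaken for the release.
    for i in range(len(apart) - hold + 1):
        if apart[i:i + hold].all():
            return i
    return None
```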

Data Analysis and Scoring

To score a shot, we measured its similarity to a ground-truth “perfect” shot. If you’re curious, the reference is the shot of Steve Nash, a former NBA player.

To evaluate the trajectory similarity between the user’s shot and Nash’s shot, we used Fast Dynamic Time Warping (FastDTW) and Procrustes analysis.

FastDTW aligns two time series even if they are not synchronized in time. This let us compare the two shots regardless of how quickly or slowly each was taken.
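To illustrate the idea, here is classic dynamic time warping in plain NumPy. Note that this is the exact quadratic-time algorithm, not the FastDTW approximation used in the project; it is swapped in here only because it is short enough to read in full. The `dtw_distance` name is illustrative.

```python
import numpy as np


def dtw_distance(a, b):
    """Exact DTW distance between two sequences of (x, y) points."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n, m = len(a), len(b)
    # cost[i, j] = best alignment cost of a[:i] against b[:j].
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = np.linalg.norm(a[i - 1] - b[j - 1])
            # Extend the cheapest of: repeat a point in b, repeat a point
            # in a, or advance both sequences together.
            cost[i, j] = d + min(cost[i - 1, j], cost[i, j - 1],
                                 cost[i - 1, j - 1])
    return cost[n, m]
```

A trajectory sampled at half speed (each point repeated) has a DTW distance of zero to the original, which is the timing invariance described above.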

Procrustes analysis focuses on comparing the shape of the trajectories themselves, not how they were executed in time. It removes the differences in position, scale, and rotation, which is useful given that the videos are often taken with different frame sizes.
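A small NumPy sketch of the Procrustes disparity between two trajectories. It assumes both trajectories have already been resampled to the same number of points, and it allows reflections as well as rotations; the `procrustes_disparity` name is illustrative and the project may rely on a library routine instead.

```python
import numpy as np


def procrustes_disparity(a, b):
    """Shape difference between two equal-length (N, 2) point sets.

    Returns roughly 0 for identical shapes, regardless of translation,
    uniform scale, or rotation; larger values mean less similar shapes.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # Remove position: center both point sets on the origin.
    a = a - a.mean(axis=0)
    b = b - b.mean(axis=0)
    # Remove scale: normalize both to unit Frobenius norm.
    a = a / np.linalg.norm(a)
    b = b / np.linalg.norm(b)
    # Remove rotation: the singular values of a.T @ b give the best
    # achievable overlap under an orthogonal transform.
    s = np.linalg.svd(a.T @ b, compute_uv=False)
    return 1.0 - s.sum() ** 2
```

An arc that has been shifted, enlarged, and rotated still scores close to zero against the original, while an arc compared to a straight line does not.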

Together, these methods let us compare the overall shape of the two trajectories without worrying about differences in timing or video quality.

Results

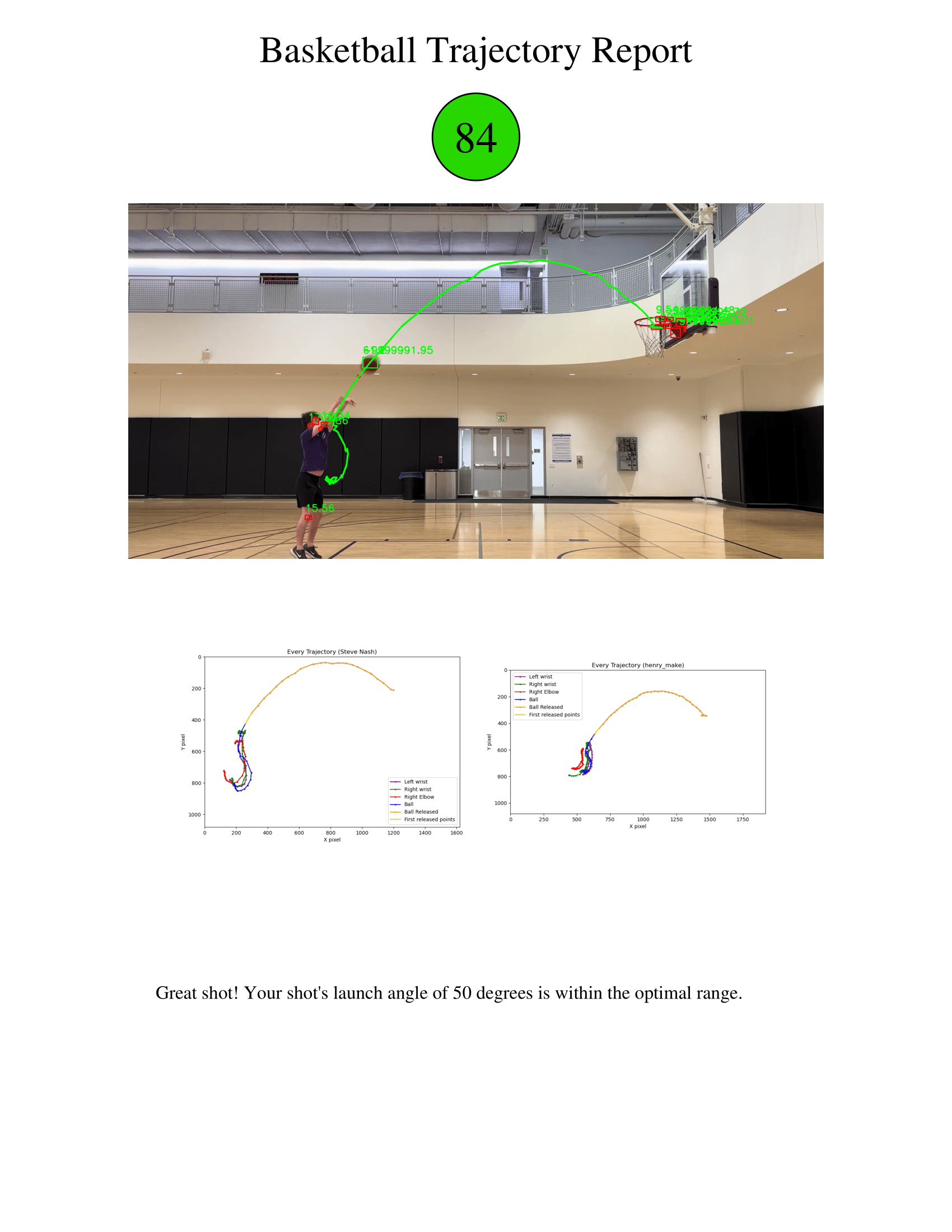

The program outputs an automatically generated PDF report with personalized feedback.